
I. Introduction

The ability to recognize, analyze, and respond to emotions in oneself and others is called emotional intelligence (EI). As artificial intelligence (AI) advances and permeates more facets of our lives, building emotional intelligence into AI systems becomes increasingly vital. Emotion-aware AI can lead to more empathic, intuitive, and successful human-machine interactions, bridging the gap between purely technical solutions and ones that address human needs and emotions.

The potential impact of emotion-aware AI on various industries

Customer service, mental health, education, entertainment, and robotics are just some areas that could benefit from emotion-aware AI. AI systems that perceive and respond to human emotions can improve consumer experiences, provide tailored support, create better human-machine interactions, and enable previously inconceivable new applications. As a result, there is an increasing need for emotion-aware AI, and researchers and developers are attempting to create AI models that can effectively interpret and respond to human emotions.

II. Overview of Human Emotions

A. Definition of Emotions

Emotions are complex, multidimensional phenomena that involve subjective feelings, physiological responses, and expressive behaviors. They are essential for human communication, decision-making, and social interactions. Emotions can be categorized into discrete categories or considered as points within a continuous multidimensional space.

B. Emotion models used in AI

Basic emotions

The basic emotion model, proposed by psychologists such as Paul Ekman and Carroll Izard, posits that there is a set of universal, innate emotions experienced by all humans. These basic emotions typically include happiness, sadness, anger, fear, surprise, and disgust. The advantage of using basic emotions in AI is that they provide a simple, easily interpretable framework for understanding emotional states.

Dimensional emotions

Dimensional emotion models, such as the circumplex model by James A. Russell, consider emotions as points within a continuous, multidimensional space. The two primary dimensions are usually valence (positive-negative) and arousal (high-low). Dimensional models offer a more nuanced understanding of emotions, allowing for the recognition of complex and mixed emotions that cannot be easily categorized into basic emotion labels.
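
The following Python sketch makes the contrast between the two models concrete. A basic-emotion model predicts a discrete label. A dimensional model predicts a (valence, arousal) point, which can be described by its quadrant or mapped back to the nearest label. The coordinates in `REFERENCE_POINTS` are rough placements chosen for illustration. They are assumptions, not values from Russell's published work.

```python
import math

# Illustrative (valence, arousal) positions in [-1, 1]. These are hand-picked
# placements for demonstration, NOT published circumplex coordinates.
REFERENCE_POINTS = {
    "happy": (0.8, 0.5),
    "angry": (-0.7, 0.7),
    "afraid": (-0.6, 0.8),
    "sad": (-0.7, -0.5),
    "calm": (0.6, -0.6),
}


def quadrant(valence: float, arousal: float) -> str:
    """Describe which quadrant of the valence-arousal plane a point falls in."""
    v = "positive" if valence >= 0 else "negative"
    a = "high" if arousal >= 0 else "low"
    return f"{v} valence, {a} arousal"


def nearest_label(valence: float, arousal: float) -> str:
    """Map a continuous point to the closest discrete label (Euclidean distance)."""
    return min(
        REFERENCE_POINTS,
        key=lambda name: math.dist((valence, arousal), REFERENCE_POINTS[name]),
    )


print(quadrant(0.3, -0.4))       # positive valence, low arousal
print(nearest_label(0.3, -0.4))  # calm
```

A dimensional prediction such as (0.3, -0.4) keeps more information than a single label. Collapsing it to "calm" is only one possible downstream choice.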

C. Emotion recognition challenges in AI

AI systems face several challenges when it comes to recognizing human emotions. These challenges include:

- Variability in emotional expression: People express emotions differently, making it difficult to create a one-size-fits-all model for emotion recognition.

- Ambiguity in emotion labeling: Some emotions, such as surprise and fear, may be expressed similarly, making it challenging to differentiate between them.

- Cultural differences: Emotional expressions and interpretations can vary across cultures, requiring AI models to account for these differences to recognize emotions accurately.

- Data quality and availability: Developing accurate emotion recognition models requires large, diverse, and well-annotated datasets, which may not always be readily available.

- Multimodality: Emotions can be expressed through various channels, such as text, speech, and facial expressions, requiring AI models to integrate information from multiple sources.

III. How to Train AI with Human Emotions


- Data Collection and Annotation for Emotion Recognition

A. Importance of diverse and balanced datasets

Creating accurate and reliable emotion recognition models requires diverse and balanced datasets. Diverse datasets help the AI model generalize across demographics, languages, and cultural backgrounds. Balanced datasets help the model recognize each emotion with comparable accuracy, reducing bias toward over-represented emotions.
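
A first practical check is to measure how imbalanced the labels actually are. If collecting more data is not an option, a common mitigation is to weight each class during training. The sketch below uses toy labels (made up for illustration) and the inverse-frequency formula `n_samples / (n_classes * count)`. This is the same formula scikit-learn applies for `class_weight="balanced"`.

```python
from collections import Counter


def class_distribution(labels):
    """Return each emotion's share of the dataset."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * count).

    Rarer emotions get larger weights, so they contribute more to a weighted loss.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


# Toy, made-up labels: "angry" is heavily under-represented.
labels = ["happy"] * 6 + ["sad"] * 3 + ["angry"] * 1
print(class_distribution(labels))         # {'happy': 0.6, 'sad': 0.3, 'angry': 0.1}
print(inverse_frequency_weights(labels))  # 'angry' gets the largest weight
```

Class weights only rebalance the loss. They cannot add the missing variety of expressions that a genuinely diverse dataset provides.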

B. Types of emotional data

Text

Text-based emotion recognition involves analyzing written content, such as social media posts, emails, or chat messages, to determine the underlying emotions. Examples of text-based emotion datasets include the Affective Text dataset (from SemEval-2007) and the emotion datasets released through later SemEval shared tasks.

Audio

Audio-based emotion recognition focuses on detecting emotions from speech signals. Audio features, such as pitch, intensity, and spectral characteristics, can be used to infer the speaker’s emotional state. Common audio emotion datasets include the Emo-DB, IEMOCAP, and RAVDESS datasets.

Visual

Visual emotion recognition primarily involves analyzing facial expressions, gestures, and body language to determine a person’s emotional state. Widely used visual emotion datasets include the CK+ dataset, FER2013 dataset, and the AffectNet dataset.

C. Data annotation and labeling techniques

Crowdsourcing

Crowdsourcing is a popular method for obtaining emotion annotations from a large group of people. Platforms like Amazon Mechanical Turk allow researchers to gather annotations from multiple individuals, which can then be aggregated to assign a consensus label to each data instance.
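
The simplest way to aggregate crowd labels is a majority vote combined with an agreement check, sketched below in Python. The `min_agreement` threshold is a hypothetical parameter: items that do not clear it are flagged, for example for expert review, instead of receiving a forced label. More elaborate schemes weight each annotator by estimated reliability; this sketch does not.

```python
from collections import Counter


def aggregate_annotations(annotations, min_agreement=0.5):
    """Collapse several crowd labels for one item into a consensus label.

    Returns (label, agreement), where agreement is the share of annotators
    who chose the winning label. If agreement does not exceed min_agreement,
    the label is None so the item can be reviewed or discarded.
    """
    counts = Counter(annotations)
    label, votes = counts.most_common(1)[0]
    agreement = votes / len(annotations)
    return (label if agreement > min_agreement else None), agreement


print(aggregate_annotations(["fear", "fear", "surprise", "fear", "sad"]))  # ('fear', 0.6)
print(aggregate_annotations(["fear", "surprise"]))                         # (None, 0.5)
```

The second call shows the fear/surprise ambiguity discussed earlier. The annotators split evenly, so no consensus label is assigned.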

Expert labeling

In some cases, it may be necessary to involve domain experts, such as psychologists, to label emotional data. Expert labeling can provide more accurate and reliable annotations but may be more time-consuming and expensive compared to crowdsourcing.

D. Ethical considerations in data collection

Collecting and annotating emotional data raises several ethical concerns, including:

Privacy: Ensuring that the data is collected with the participants’ informed consent and that their personal information is protected.

Bias: Addressing potential biases in the dataset, such as the underrepresentation of certain demographics or emotional states.

Emotional well-being: Considering the potential psychological impact on participants when collecting emotional data, especially when dealing with sensitive or distressing content.

- Feature Extraction and Representation

A. Natural Language Processing (NLP) for text-based emotion recognition

NLP techniques, such as tokenization, stemming, and stop-word removal, can be applied to preprocess text data. Feature extraction methods, such as bag-of-words, term frequency-inverse document frequency (TF-IDF), and word embeddings (e.g., Word2Vec, GloVe), can be used to represent textual information numerically.
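
The Python sketch below shows preprocessing (lowercasing, tokenization, stop-word removal) followed by plain TF-IDF weighting, using only the standard library. The stop-word list is a tiny illustrative assumption, and stemming is omitted for brevity. Libraries such as scikit-learn's `TfidfVectorizer` use a smoothed IDF by default, so their numbers will differ from this unsmoothed version.

```python
import math
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption); real pipelines use fuller lists.
STOP_WORDS = {"i", "am", "so", "the", "a", "is", "this", "my", "and"}


def preprocess(text):
    """Lowercase, tokenize on letters/apostrophes, and drop stop words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def tf_idf(documents):
    """Return one {term: weight} dict per document.

    tf  = term count / number of tokens in the document
    idf = log(N / df), where df is the number of documents containing the term
    """
    tokenized = [preprocess(doc) for doc in documents]
    n_docs = len(tokenized)
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        if not tokens:  # document was all stop words / punctuation
            vectors.append({})
            continue
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return vectors


docs = [
    "I am so happy and excited today!",
    "This is the worst day, I am so angry.",
    "Happy to help, have a great day.",
]
vectors = tf_idf(docs)
print(vectors[0])  # "excited" and "today" outweigh "happy", which also appears in doc 3
```

Words that occur in many documents get low IDF, so distinctive emotional words such as "excited" or "angry" stand out in each vector.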

B. Audio feature extraction for speech emotion recognition

In speech emotion recognition, relevant features can be extracted from the audio signal, such as pitch, intensity, and spectral characteristics (e.g., Mel-frequency cepstral coefficients or MFCCs). These features can be used to train machine learning models to recognize emotions from speech.

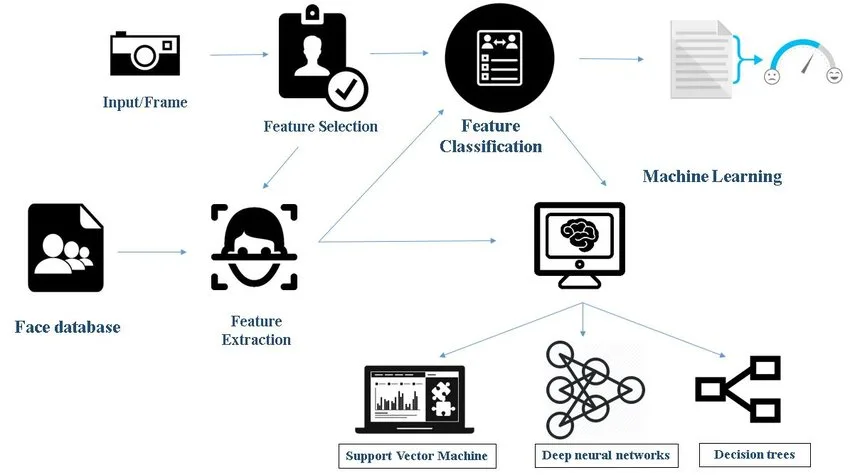
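
Two of the features named above can be computed with a few lines of standard-library Python: intensity, as root-mean-square (RMS) energy, and pitch, via a plain autocorrelation search. The 80 to 400 Hz search range is an assumed speech range. The synthetic 220 Hz tone stands in for a voiced speech frame. MFCCs involve more steps (framing, mel filter bank, DCT) and are typically computed with a library such as librosa (`librosa.feature.mfcc`), so they are not reimplemented here.

```python
import math


def rms(signal):
    """Root-mean-square energy, a common proxy for perceived intensity."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def estimate_pitch(signal, sample_rate, fmin=80.0, fmax=400.0):
    """Estimate the fundamental frequency (Hz) by autocorrelation.

    Only lags corresponding to fmin..fmax Hz are searched; the lag with the
    largest autocorrelation is taken as one pitch period.
    """
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        corr = sum(signal[n] * signal[n + lag] for n in range(len(signal) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag


# Synthetic 220 Hz tone standing in for a short voiced speech frame.
sr = 8000
tone = [0.5 * math.sin(2 * math.pi * 220 * n / sr) for n in range(2048)]
print(round(rms(tone), 3))                 # 0.354
print(round(estimate_pitch(tone, sr), 1))  # 222.2 (8000 / 36; integer lags limit precision)
```

Real speech is noisy and partly unvoiced, so production pitch trackers add voicing decisions and interpolation around the peak. This sketch has neither.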

C. Visual feature extraction for facial emotion recognition

For facial emotion recognition, features such as facial landmarks, geometric features (e.g., distances between facial points), and appearance features (e.g., texture and color) can be extracted from images or video frames. Additionally, deep learning-based methods, such as CNNs, can automatically learn features from raw pixel data.
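
Geometric features can be as simple as distances between landmarks, normalized so that face size does not matter. The landmark names and coordinates below are hypothetical values invented for illustration. Real landmark detectors return many more points, for example 68-point layouts. The sketch divides every pairwise distance by the inter-ocular distance.

```python
import math
from itertools import combinations

# Hypothetical (x, y) pixel coordinates for one face; made up for illustration.
landmarks = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "mouth_left": (35.0, 80.0),
    "mouth_right": (65.0, 80.0),
    "upper_lip": (50.0, 75.0),
    "lower_lip": (50.0, 85.0),
}


def geometric_features(points):
    """Pairwise landmark distances divided by the inter-ocular distance.

    Normalizing by eye distance makes the features roughly invariant to how
    large the face appears in the image.
    """
    scale = math.dist(points["left_eye"], points["right_eye"])
    return {
        f"{a}-{b}": math.dist(points[a], points[b]) / scale
        for a, b in combinations(sorted(points), 2)
    }


features = geometric_features(landmarks)
print(features["mouth_left-mouth_right"])  # 0.75 (mouth width relative to eye distance)
print(features["lower_lip-upper_lip"])     # 0.25 (mouth opening)
```

Features like mouth width and mouth opening change visibly between, say, a smile and a surprised expression, which is why they are useful inputs to a classifier.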

D. Feature representation techniques

Handcrafted features

Handcrafted features are specifically designed by researchers based on domain knowledge to capture relevant information for emotion recognition. Examples of handcrafted features include geometric facial features, audio features like pitch and MFCCs, and text features like TF-IDF.

Deep learning-based features

Deep learning techniques, such as CNNs, RNNs, and LSTMs, can automatically learn features from raw data without the need for manual feature engineering. These models are capable of capturing complex patterns and relationships within the data, leading to improved performance in emotion recognition tasks. For example, CNNs can learn features from facial images, while RNNs and LSTMs can learn sequential patterns in text and audio data.

Examples of deep learning-based feature extraction methods include:

1. Pre-trained word embeddings (e.g., Word2Vec, GloVe) and contextual embeddings from models such as BERT for text-based emotion recognition.

2. Convolutional Neural Networks (CNNs) for facial emotion recognition from images.

3. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks for sequential data, such as speech emotion recognition from audio signals or textual data.

By leveraging both handcrafted and deep learning-based features, AI models can benefit from the strengths of each approach, leading to more robust and accurate emotion recognition systems.

- AI Models for Emotion Recognition

A. Supervised learning methods

Support Vector Machines (SVM)

Support Vector Machines (SVMs) are a standard supervised learning method for classification problems such as emotion recognition. An SVM seeks the hyperplane that maximizes the margin between classes. By using kernel functions such as the linear, polynomial, and radial basis function (RBF) kernels, SVMs can model both linear and nonlinear relationships between features and emotions.
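
To show what "maximizing the margin" means in training, here is a minimal linear SVM trained with the Pegasos sub-gradient method. Pegasos is one published way to optimize the soft-margin objective; it is swapped in here for illustration. Simplifications, all assumptions of this sketch:

- there is no bias term, so the hyperplane passes through the origin;
- examples are visited in order instead of at random;
- there is no kernel.

For kernelized SVMs, a library such as scikit-learn (`sklearn.svm.SVC(kernel="rbf")`) is the usual choice.

```python
def train_linear_svm(X, y, lam=0.1, epochs=50):
    """Train a linear soft-margin SVM with Pegasos-style sub-gradient steps.

    X: list of feature vectors; y: labels in {+1, -1}.
    Minimizes lam/2 * ||w||^2 + mean(hinge loss). No bias term.
    """
    w = [0.0] * len(X[0])
    t = 0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            w = [(1 - eta * lam) * wj for wj in w]  # shrink: regularizer gradient step
            if margin < 1:  # hinge loss active: move w toward classifying xi correctly
                w = [wj + eta * yi * xj for wj, xj in zip(w, xi)]
    return w


def predict(w, x):
    """Return +1 or -1 depending on which side of the hyperplane x lies."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) >= 0 else -1


# Made-up 2-D features, labeled +1 ("positive emotion") or -1 ("negative emotion").
X = [[2.0, 2.0], [3.0, 1.0], [1.0, 3.0], [-2.0, -2.0], [-3.0, -1.0], [-1.0, -3.0]]
y = [1, 1, 1, -1, -1, -1]
w = train_linear_svm(X, y)
print([predict(w, x) for x in X])  # [1, 1, 1, -1, -1, -1]
```

Only points that fall inside the margin (`margin < 1`) change the weight vector. Those points play the role of support vectors.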

Decision trees and random forests

Decision trees are a hierarchical learning method that recursively partitions the feature space based on feature values, producing a tree-like structure whose leaf nodes represent emotion classes. Decision trees are simple to visualize and interpret but prone to overfitting. Random forests, an ensemble method, address this problem by combining many decision trees, each trained on a random sample of the data and a random subset of the features, and aggregating their predictions by majority vote.
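
The two core operations described above are easy to write out. The first is the greedy split a tree makes at each node, scored here with Gini impurity (one common criterion). The second is the majority vote a random forest uses to combine its trees. A full tree would apply `best_split` recursively; the toy data is made up.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum(p_k^2) over the emotion classes present."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y):
    """Find the (feature, threshold) split with the lowest weighted Gini impurity.

    Candidate thresholds are midpoints between consecutive distinct values.
    A decision tree repeats this greedy step recursively at every node.
    """
    best = (None, None, float("inf"))
    n = len(y)
    for f in range(len(X[0])):
        values = sorted(set(row[f] for row in X))
        for lo, hi in zip(values, values[1:]):
            thr = (lo + hi) / 2
            left = [label for row, label in zip(X, y) if row[f] <= thr]
            right = [label for row, label in zip(X, y) if row[f] > thr]
            score = len(left) / n * gini(left) + len(right) / n * gini(right)
            if score < best[2]:
                best = (f, thr, score)
    return best


def majority_vote(predictions):
    """Combine class predictions from several trees, as a random forest does."""
    return Counter(predictions).most_common(1)[0][0]


# Made-up data: feature 0 separates the classes, feature 1 does not.
X = [[0.1, 5.0], [0.2, 3.0], [0.8, 4.0], [0.9, 6.0]]
y = ["sad", "sad", "happy", "happy"]
print(best_split(X, y))                          # (0, 0.5, 0.0)
print(majority_vote(["happy", "sad", "happy"]))  # happy
```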

B. Deep learning techniques

Convolutional Neural Networks (CNN)

This is one of the important steps in building emotion-aware AI. Convolutional Neural Networks (CNNs) are a type of deep learning model that is particularly effective for image-based emotion recognition. CNNs consist of convolutional layers that learn local patterns, pooling layers that reduce spatial dimensions, and fully connected layers that classify the emotions. Because CNNs learn features directly from raw pixel data, they are a powerful tool for facial emotion recognition.
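
The forward pass of one convolution, ReLU, and pooling stage can be shown without a deep learning library, using the pure-Python sketch below. In a real CNN the kernel weights are learned during training. Here the kernel is a hand-chosen vertical-edge detector, an assumption for illustration. The 5x5 "image" stands in for a small grayscale face crop.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation (what deep learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Zero out negative responses."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; edge rows/columns that don't fill a window are dropped."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]  # dark-to-bright vertical edge
edge_kernel = [[-1.0, 1.0], [-1.0, 1.0]]               # responds to left-to-right brightening
print(max_pool(relu(conv2d(image, edge_kernel))))       # [[2.0, 0.0], [2.0, 0.0]]
```

The 5x5 input shrinks to a 4x4 feature map after convolution and to 2x2 after pooling. The strong responses mark where the edge is, which is the "local pattern" a learned kernel might pick up around the eyes or mouth.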

Recurrent Neural Networks (RNN)

Recurrent Neural Networks (RNNs) are designed for processing sequential data, such as text or speech signals, making them suitable for text-based and audio-based emotion recognition. RNNs have feedback loops that allow them to maintain information from previous time steps, enabling them to model temporal dependencies within the data.
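
The "feedback loop" amounts to feeding the previous hidden state back in at every step. The minimal single-unit Elman RNN below shows this. The scalar inputs could be, say, per-frame energy values. The weights `w_x`, `w_h`, and `b` are hypothetical fixed numbers rather than trained ones.

```python
import math


def rnn_forward(inputs, w_x, w_h, b):
    """Run a single-unit Elman RNN over a sequence of scalar inputs.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b). The same weights are reused at
    every step, and h carries information forward from earlier steps.
    """
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Same final input (0.0), different histories: only the first sequence
# leaves a trace of the earlier input in the final state.
print(rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.8, b=0.0))
print(rnn_forward([0.0, 0.0, 0.0], w_x=1.0, w_h=0.8, b=0.0))  # [0.0, 0.0, 0.0]
```

The trace of the early input shrinks at every step (multiplied by `w_h` and squashed by `tanh`). That shrinking is the intuition behind the vanishing gradient problem that LSTMs address.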

Long Short-Term Memory (LSTM)

Long Short-Term Memory (LSTM) networks are a type of RNN specifically designed to address the vanishing gradient problem, which occurs when training RNNs on long sequences. LSTMs have memory cells and gating mechanisms that allow them to learn and retain information over extended periods, making them more effective at modeling long-range dependencies in text and speech data.
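
The standard LSTM equations can be written out for a single unit with scalar input and state, as in the sketch below. The gate parameters in `params` are hypothetical values chosen so that the forget gate stays mostly open. With that setting, a signal written at the first step is largely retained several steps later.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell (scalar input and state).

    p maps each path to hypothetical weights (w_x, w_h, b):
    "f" forget gate, "i" input gate, "g" candidate value, "o" output gate.
    """
    def pre(k):
        w_x, w_h, b = p[k]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("f"))    # how much of the old memory to keep
    i = sigmoid(pre("i"))    # how much new information to write
    g = math.tanh(pre("g"))  # candidate value to write
    o = sigmoid(pre("o"))    # how much of the memory to expose as output
    c = f * c_prev + i * g   # additive memory update
    h = o * math.tanh(c)
    return h, c


params = {
    "f": (0.0, 0.0, 4.0),   # forget gate biased open: keep memory by default
    "i": (6.0, 0.0, -3.0),  # input gate opens only for large inputs
    "g": (2.0, 0.0, 0.0),
    "o": (0.0, 0.0, 4.0),
}
h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
print(round(c, 2))  # 0.85: most of the first input's contribution is still stored
```

The additive update of `c`, scaled by a forget gate close to 1, is what lets information (and gradients) survive across many steps. In a plain RNN, by contrast, the state is repeatedly squashed.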

Transformer models

Transformer models, introduced by Vaswani et al. in 2017, have become increasingly popular for various NLP tasks, including text-based emotion recognition. Transformers rely on self-attention mechanisms to model relationships between words in a sequence, making them highly effective at capturing context and understanding complex language patterns. Pre-trained transformer models, such as BERT, GPT, and RoBERTa, can be fine-tuned for emotion recognition tasks.
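
The core self-attention computation is softmax(QKᵀ/√d)V, sketched below in pure Python. For brevity, the queries, keys, and values are set to the same toy 2-dimensional embeddings. Real transformers instead compute them with learned projection matrices, use many more dimensions, and run several attention heads in parallel. The tokens and embedding values are made up.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Each argument is a list of per-token vectors. Each output vector is a
    weighted average of the value vectors, weighted by how well that token's
    query matches every key.
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))])
    return outputs, weights


tokens = ["not", "very", "happy"]
emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy embeddings (assumption)
out, attn = self_attention(emb, emb, emb)
for tok, row in zip(tokens, attn):
    print(tok, [round(w, 2) for w in row])
```

Every token attends to every other token in one step. This is how a word like "not" can directly influence the representation of "happy" regardless of the distance between them.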

C. Transfer learning and fine-tuning

Transfer learning is a technique that leverages pre-trained models to improve performance on new tasks with limited training data. In the context of emotion recognition, transfer learning can involve using pre-trained CNNs for facial emotion recognition, pre-trained word embeddings for text-based emotion recognition, or pre-trained transformer models like BERT. Fine-tuning these pre-trained models on the target emotion recognition task lets the model adapt to the specific characteristics of the new task while retaining the knowledge gained during pre-training, which often improves performance and shortens training time.

- Model Evaluation and Validation

A. Performance metrics for emotion recognition models

Accuracy

Accuracy is the most straightforward performance metric for classification tasks, including emotion recognition. It measures the proportion of correct predictions from the total number of instances. However, accuracy can be misleading in cases of imbalanced datasets, where certain emotions are underrepresented.

Precision, recall, and F1-score

Precision, recall, and F1-score are alternative performance metrics that provide a more detailed evaluation of a model’s performance, especially in cases of imbalanced datasets. Precision measures the proportion of true positive predictions out of all positive predictions made by the model. Recall measures the proportion of true positive predictions out of all actual positive instances. The F1-score is the harmonic mean of precision and recall and provides a balanced measure of a model’s performance, taking both false positives and false negatives into account.
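
The Python sketch below computes these metrics from scratch. It also shows why accuracy can mislead. The toy labels are made up: a model that always predicts "neutral" on a dataset that is 80% neutral scores 0.8 accuracy, yet its recall for "angry" is zero. The function name `emotion_metrics` is hypothetical. Libraries such as scikit-learn provide equivalent, more complete functions.

```python
def emotion_metrics(y_true, y_pred):
    """Return accuracy, per-class (precision, recall, F1), and macro-averaged F1."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    per_class = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0  # 0 when nothing was predicted
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[label] = (precision, recall, f1)
    macro_f1 = sum(f1 for _, _, f1 in per_class.values()) / len(labels)
    return accuracy, per_class, macro_f1


# Made-up, imbalanced example: the model never predicts "angry".
y_true = ["neutral"] * 8 + ["angry"] * 2
y_pred = ["neutral"] * 10
acc, per_class, macro = emotion_metrics(y_true, y_pred)
print(round(acc, 2), round(macro, 3))  # 0.8 0.444
print(per_class["angry"])              # (0.0, 0.0, 0.0)
```

Macro-averaged F1 gives every emotion equal weight, so the ignored minority class pulls the score down sharply.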

B. Cross-validation techniques

Cross-validation is a technique used to assess the performance of a model on unseen data, providing an estimate of its generalization ability. Common cross-validation techniques include:

1. K-fold cross-validation: The dataset is divided into k equal-sized folds. The model is trained on k-1 folds and tested on the remaining fold, with this process repeated k times, using each fold as the test set once. The average performance across all k iterations provides the model’s cross-validated performance.

2. Leave-one-out cross-validation: This is a specific case of k-fold cross-validation, where k equals the number of instances in the dataset. Each instance is used as the test set exactly once, and the model is trained on the remaining instances.
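
Generating the train/test index splits for k-fold cross-validation takes only a few lines, as sketched below. For simplicity this version does not shuffle. In practice the data is usually shuffled first, and for emotion datasets often stratified so that each fold keeps the overall emotion proportions. Setting `k` equal to the number of samples gives leave-one-out cross-validation.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation (no shuffling).

    Fold sizes differ by at most one when n_samples is not divisible by k.
    With k == n_samples this is leave-one-out cross-validation.
    """
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


for train_idx, test_idx in k_fold_indices(10, 3):
    print(test_idx)
# [0, 1, 2, 3]
# [4, 5, 6]
# [7, 8, 9]
```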

C. Addressing overfitting and underfitting

Overfitting occurs when a model learns to perform well on the training data but fails to generalize to new, unseen data. Underfitting occurs when a model is too simplistic and cannot capture the underlying patterns in the data. To address these issues:

Regularization: Techniques such as L1 or L2 regularization can be applied to penalize complex models, reducing overfitting.

Early stopping: In deep learning models, training can be stopped when the validation performance starts to degrade, preventing overfitting.

Model selection: Choose a model with the appropriate complexity for the problem. Simple models may underfit, while overly complex models may overfit.

Feature selection: Remove irrelevant or noisy features that may contribute to overfitting or underfitting.

Training data: Increase the size or diversity of the training data to improve the model’s generalization ability.
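
Early stopping is usually governed by a "patience" setting: how many epochs without improvement to tolerate before stopping. The sketch below replays a precomputed list of validation losses, which is made up for illustration; in real training each value would come from evaluating the model after an epoch. Frameworks offer this built in, for example Keras's `EarlyStopping` callback with its `patience` and `restore_best_weights` options.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch whose weights to keep, given validation loss per epoch.

    Training stops once the loss has failed to improve for `patience`
    consecutive epochs; the best epoch seen so far is returned.
    """
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70, 0.50]
print(early_stopping_epoch(val_losses, patience=3))  # 2
print(early_stopping_epoch(val_losses, patience=5))  # 6
```

The two calls show the trade-off. A small patience stops sooner and risks missing a later improvement; a large patience trains longer.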

D. Strategies for model improvement

Hyperparameter tuning: Optimize the model’s hyperparameters, such as learning rate, regularization strength, or the number of layers in a neural network, to achieve better performance.

Ensemble methods: Combine multiple models to create an ensemble that can provide more accurate and robust predictions.

Feature engineering: Create new features or modify existing ones to better capture the underlying patterns in the data.

Transfer learning: Leverage pre-trained models or knowledge from related tasks to improve the model’s performance on the target task.

Model selection: Experiment with different AI models to find the one that best suits the problem and dataset at hand.
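
Hyperparameter tuning is often done with an exhaustive grid search, sketched below. `fake_evaluate` is a hypothetical stand-in for "train a model with these settings and return its validation score". In practice that score would be, for example, macro-F1 averaged over cross-validation folds, and that step is where nearly all the cost lies.

```python
from itertools import product


def grid_search(param_grid, evaluate):
    """Try every combination in param_grid; return (best_params, best_score).

    evaluate(params) must return a validation score where higher is better.
    """
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for combo in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def fake_evaluate(params):
    """Hypothetical scoring function that peaks at learning_rate=0.01, num_layers=2."""
    return 1.0 - abs(params["learning_rate"] - 0.01) * 10 - abs(params["num_layers"] - 2) * 0.05


grid = {"learning_rate": [0.1, 0.01, 0.001], "num_layers": [1, 2, 3]}
print(grid_search(grid, fake_evaluate))  # ({'learning_rate': 0.01, 'num_layers': 2}, 1.0)
```

The number of combinations grows multiplicatively with each added hyperparameter. For larger search spaces, random search or Bayesian optimization is often preferred.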

IV. Real-world Applications of Emotion-Aware AI

A. Customer service and sentiment analysis

Emotion-aware AI can revolutionize customer service by analyzing customer feedback, emails, and social media posts to understand customers' sentiments and emotions. Businesses can use this information to identify areas for improvement, address customer concerns more effectively, and tailor their communication to resonate better with their audience. Chatbots are a natural place to put these capabilities into practice.

B. Mental health and well-being applications

Emotion recognition technologies can play a significant role in mental health and well-being applications. By monitoring users’ emotional states through their speech, text, or facial expressions, AI-driven systems can provide personalized support, recommend coping strategies, or alert healthcare professionals if necessary.

C. Human-robot interaction

As robots become more integrated into our daily lives, emotion-aware AI can improve human-robot interaction by enabling robots to understand and respond to human emotions appropriately. This can lead to more natural, engaging, and empathetic interactions, enhancing the user experience and fostering trust between humans and robots.

D. Entertainment and gaming

In the entertainment and gaming industries, emotion-aware AI can be used to create more immersive and personalized experiences. By recognizing players’ emotions, game developers can adjust gameplay, difficulty, or storylines in real-time to create a more engaging experience. Similarly, filmmakers and content creators can use emotion recognition technologies to gauge audience reactions and tailor their content accordingly.

V. Conclusion

A. The potential of AI in understanding human emotions

Emotion-aware AI has the potential to revolutionize various industries by offering more personalized, empathetic, and engaging experiences. By understanding and responding to human emotions, AI-driven systems can foster deeper connections between humans and technology, improving the overall user experience and addressing specific needs in areas such as mental health, customer service, and human-robot interaction.

B. Future challenges and opportunities in emotion-aware AI development

While significant progress has been made in emotion recognition technologies, challenges remain, such as improving the accuracy and generalizability of models across diverse populations and cultural backgrounds. As AI advances, there are opportunities to develop more sophisticated emotion recognition systems that consider contextual information and integrate multimodal data to better understand and respond to human emotions. Addressing these challenges and seizing these opportunities will pave the way for AI that responds to human emotions more reliably and appropriately, leading to more meaningful interactions and applications.

FAQs

How does AI learn emotions?

AI learns to recognize emotions through supervised learning, using labeled datasets that associate specific inputs (e.g., facial expressions, text, or audio) with corresponding emotions. These datasets are used to train AI models to recognize patterns and relationships between the inputs and the emotions they represent.

What AI techniques are used to recognize emotions and feelings?

Various AI techniques can be used for recognizing emotions and feelings, including traditional machine learning methods (such as Support Vector Machines and Decision Trees) and deep learning techniques (such as Convolutional Neural Networks, Recurrent Neural Networks, and Transformer models).

How can you teach AI to recognize emotions?

You can teach AI to recognize and interpret emotions by training models on labeled datasets that associate specific inputs with corresponding emotions. The model learns to identify patterns in the data and make predictions about the emotions present in new, unseen data.

Can AI understand human emotions?

Yes, AI can understand human emotions to a certain extent. AI models can be trained to recognize and interpret emotions from various inputs such as facial expressions, speech, and text. However, the accuracy and depth of understanding may vary depending on the quality of the data and the model used.

Can AI fall in love?

No. AI cannot fall in love the way humans do, because it lacks consciousness and the ability to experience emotions. AI models can recognize and interpret emotions, but they do not have feelings themselves.

Can AI feel empathy?

AI can be trained to recognize and respond to human emotions in an empathetic manner, but it does not truly experience empathy as humans do. AI models can learn to mimic empathetic behavior, but they lack the consciousness and emotional capacity to genuinely understand and share the feelings of others.

Is AI self-aware?

Current AI models do not possess self-awareness or consciousness. AI models can process and learn from data, make decisions, and even exhibit complex problem-solving abilities, but they do not have a subjective understanding of their own existence or experiences.

What is an example of emotional AI?

An example of emotional AI is an AI-driven mental health application that monitors a user's emotional state through their speech, text, or facial expressions and provides personalized support or recommendations based on their emotions.

Can AI understand psychology?

AI can be trained to recognize and analyze patterns in psychological data, such as emotions, behaviors, or cognitive processes. However, AI models do not have a deep understanding of the underlying principles of human psychology in the same way that a human psychologist would.
