
I. Introduction

Artificial intelligence (AI) has come a long way since it was first created, and businesses are adopting it at a growing pace. One active research area is emotional intelligence in AI: with new technologies and machine learning, machines can now recognize and respond to human emotions. Emotional AI is the field of study that brings AI and human emotions together. When we talk about emotional AI, we mean computer systems that can identify, interpret, and mimic human feelings.

A. Definition of Emotional AI

Affective computing, also called emotional AI, is a subfield of AI concerned with the emotional dynamics between humans and computers. It develops algorithms and models that recognize, understand, and respond to human emotions. This makes it possible for AI systems to read and respond to nonverbal expressions of emotion, such as tone of voice, facial expressions, and body language.

B. Brief History of Emotional AI

In the 1990s, scientists began building emotional AI: machines that could detect and react to human feelings. The field of affective computing took shape when researchers such as Rosalind Picard, who founded the Affective Computing research group at the MIT Media Lab, began working on technologies that could detect and respond to people’s emotions.

One of the earliest landmarks was Kismet, a robot head built at MIT in the late 1990s by a group led by Cynthia Breazeal. Kismet was a big step forward for emotional AI because it was designed to communicate with people through facial expressions and emotional cues. Since then, the field has advanced considerably, for example with emotion analysis software.

C. Importance of Emotional AI

People are curious about the future of humans in the age of artificial intelligence. Many industries, from customer service and medicine to the arts, could benefit significantly from emotional AI. By teaching computers to recognize and respond to human emotions, emotional AI can improve customer service and help people feel more connected to each other. It could also help artists create more emotionally expressive works and help mental health professionals build AI-based tools.

Emotional AI could also help us understand ourselves better by shedding light on our deepest thoughts and actions. And by letting us interact with machines in a more human way, it may even help us become more empathetic.

In short, emotional AI may significantly change human-machine interaction and improve human well-being, which makes it a vital field of study that will help shape the future of AI.

II. Applications of Emotional AI

A. AI in Therapy

Artificial intelligence is affecting our health in both good and bad ways. Emotionally intelligent AI has numerous therapeutic and mental health applications. People with mental health problems such as anxiety, depression, and PTSD can benefit from AI-powered therapy. To provide more effective and personalized care, therapists can use emotion recognition software to better comprehend and interpret their patients’ emotional states.

Woebot, an AI-powered mental health chatbot, is one application of emotional AI in psychotherapy. It uses natural language processing to deliver techniques from cognitive-behavioural therapy (CBT) that can help with emotional regulation and general well-being. In a randomized controlled trial published in JMIR Mental Health in 2017, young adults who used Woebot for two weeks reported a significantly greater reduction in depression symptoms than a control group that received self-help information.

B. AI in Customer Service

Emotional AI can make customer service interactions more personal and sympathetic. Chatbots equipped with emotional AI can read and react to customers’ emotions, improving the interaction. Over the phone, emotion recognition software can help agents understand and respond to how customers feel, which can improve customer satisfaction and loyalty.
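To make the idea of a chatbot “reading” a customer’s emotions more concrete, here is a minimal Python sketch of the detect-then-adapt loop. It assumes a simple keyword lexicon; the `EMOTION_LEXICON` words and the `detect_emotion` and `choose_reply_style` functions are hypothetical, invented for illustration. Real emotional AI products typically use machine-learning models trained on labelled data rather than hand-picked keywords.

```python
import re

# Hypothetical keyword lexicon, for illustration only.
# Real systems learn these associations from labelled training data.
EMOTION_LEXICON = {
    "anger": {"angry", "furious", "ridiculous", "unacceptable", "annoyed"},
    "frustration": {"again", "still", "waiting", "stuck", "useless"},
    "joy": {"great", "thanks", "love", "perfect", "happy"},
}


def detect_emotion(message: str) -> str:
    """Return the emotion whose keywords appear most often, or 'neutral'."""
    words = re.findall(r"[a-z']+", message.lower())
    # Count how many words in the message belong to each emotion's keyword set.
    scores = {
        emotion: sum(word in keywords for word in words)
        for emotion, keywords in EMOTION_LEXICON.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "neutral"


def choose_reply_style(emotion: str) -> str:
    """Map a detected emotion to a (hypothetical) reply strategy for the chatbot."""
    styles = {
        "anger": "apologise and offer a human agent",
        "frustration": "acknowledge the delay and give a concrete next step",
        "joy": "thank the customer and keep it brief",
    }
    return styles.get(emotion, "answer the question directly")


if __name__ == "__main__":
    message = "This is ridiculous, I'm still waiting!"
    emotion = detect_emotion(message)
    print(emotion, "->", choose_reply_style(emotion))
```

Here the message contains one anger keyword and two frustration keywords, so `detect_emotion` returns `"frustration"` and the bot would acknowledge the delay. The keyword matching is only a stand-in that shows the overall loop, not how a commercial assistant actually scores emotions.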

Erica, Bank of America’s AI-powered virtual assistant, is an example of emotional AI in customer service. Erica is designed to understand customers’ questions and concerns and answer them in a friendly, understanding way, and Bank of America credits it with higher customer satisfaction. The bank also claims that Erica has cut response times for customer service inquiries in half.

C. AI in Art

Emotional AI can be used in the creative process in many ways, including AI-powered art tools and the production of emotionally resonant works. AI algorithms can analyze and interpret emotional cues in works of art, helping us better understand how feelings are communicated through art. AI can also be used to create art designed to evoke specific emotions.

One example from the visual arts is Pix2Pix, an AI model for image-to-image translation. With Pix2Pix, artists can quickly turn simple sketches into realistic-looking images, which lets them produce works that are both detailed and expressive.

D. AI in Education

In the classroom, emotional AI can increase participation and retention. AI-powered educational tools can recognize how a student is feeling and respond accordingly, which leads to more personalized guidance and support. Emotion recognition software could also help identify students who are struggling with their mental health so they can get the help they need.

Brainly, an AI-powered educational platform, is one example of emotional AI in the classroom. When a student uses Brainly, machine learning analyzes their data so they can get feedback and help tailored to them. Brainly claims its users show improved academic performance and more interest in their studies.

Overall, emotional AI is applicable in many settings and has the potential to radically change how humans and machines communicate and cooperate. However, it also faces difficulties and limitations that must be overcome, such as the potential for bias and the need for ethical guidelines to ensure that emotional AI technologies are developed and used responsibly.

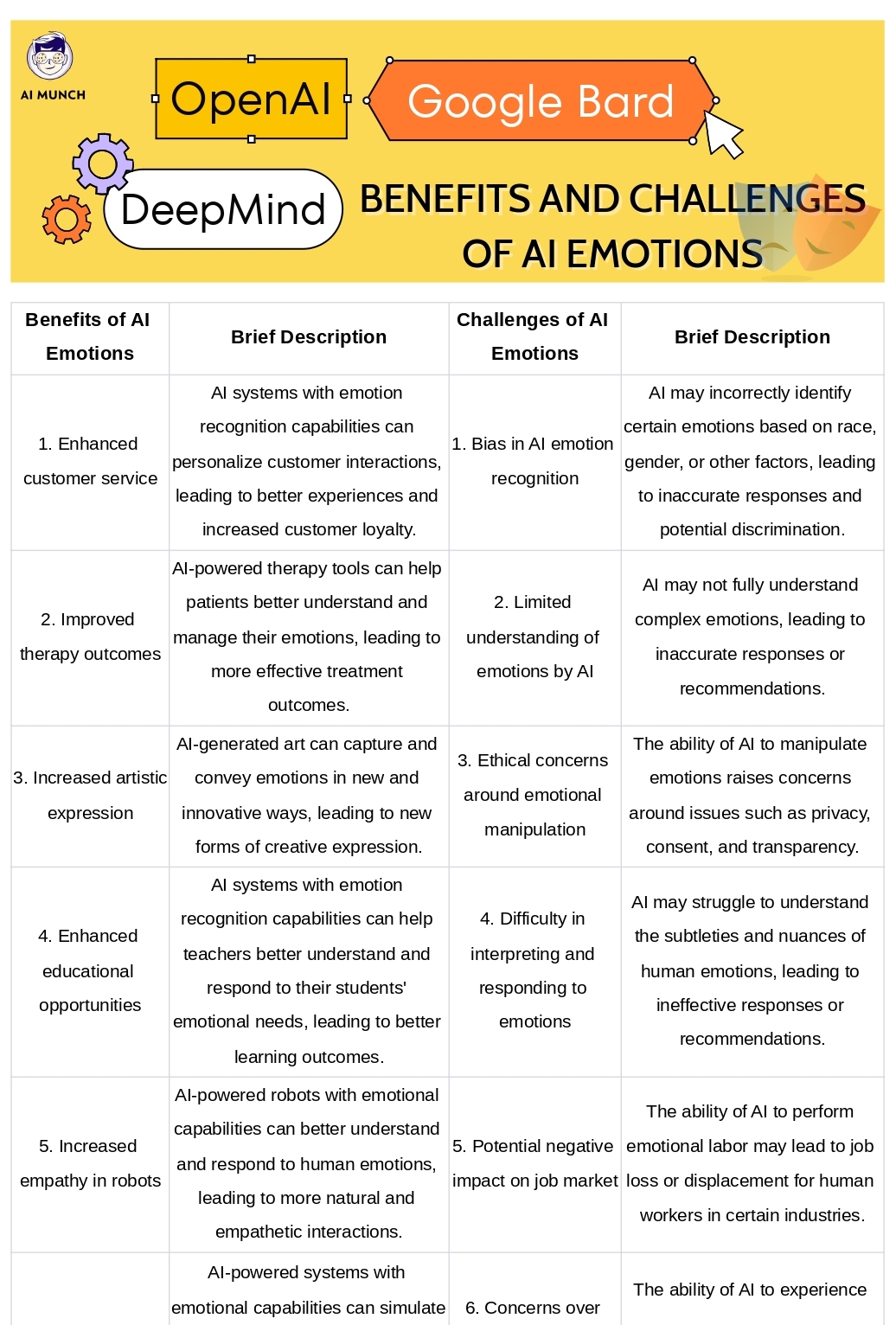

III. Challenges of Emotional AI

A. Bias in Emotional AI

Of course, there is a dark side to artificial intelligence. One major challenge facing emotional AI is the potential for bias. AI systems can become biased if the data used to train their algorithms is itself biased or does not adequately represent the population. This can cause them to identify emotions incorrectly or to respond differently to people based on characteristics such as race or gender.
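One common way to check for this kind of bias is to measure a model’s accuracy separately for each demographic group and compare the results. The Python sketch below does exactly that. The group names and evaluation records are invented purely for illustration, and `accuracy_by_group` and `accuracy_gap` are hypothetical helpers, not functions from any particular library.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute emotion-recognition accuracy separately for each group.

    Each record is a (group, true_label, predicted_label) tuple.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, true_label, predicted in records:
        total[group] += 1
        correct[group] += int(true_label == predicted)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference in accuracy between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation results, invented for illustration only.
results = [
    ("group_a", "happy", "happy"), ("group_a", "angry", "angry"),
    ("group_a", "sad", "sad"), ("group_a", "happy", "happy"),
    ("group_b", "happy", "happy"), ("group_b", "angry", "sad"),
    ("group_b", "sad", "angry"), ("group_b", "happy", "happy"),
]

per_group = accuracy_by_group(results)
print(per_group)                 # {'group_a': 1.0, 'group_b': 0.5}
print(accuracy_gap(per_group))   # 0.5
```

A large gap, like the 0.5 in this made-up example, means the system works much worse for one group, which may indicate that the group was under-represented in the training data. Accuracy is only one fairness measure; a fuller audit would also compare other error rates across groups.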

Examples of such bias include facial recognition systems that are less accurate for people with darker skin tones and chatbots that respond differently to users depending on their gender.

B. Limitations of Emotional AI

Emotional AI also has limitations that need addressing. AI does a good job of recognizing and responding to simple emotions like happiness and anger, but it can struggle with more complex feelings and subtler ways of expressing emotion. Moreover, AI may not always be able to provide the same emotional support as a human therapist or counselor, because it cannot replicate the human experience of empathy.

Some chatbots may not be able to give users the emotional support they need in a crisis, and some AI systems may fail to recognize or correctly interpret certain emotions.

C. Ethics in Emotional AI

Finally, ethical concerns must be addressed in developing and using Emotional AI. These concerns include data privacy and security issues and the potential for Emotional AI to be used for unethical purposes, such as manipulating emotions or perpetuating harmful biases.

Emotion recognition technology in surveillance systems and the possibility of Emotional AI being used to manipulate consumer behaviour are two examples of ethical concerns in Emotional AI.

IV. Future Developments in Emotional AI

A. AI Emotion Recognition

Emotion recognition is one promising area for future research and development in emotional AI. As AI algorithms improve, they may become better at identifying and understanding a wide range of human emotions and expressions. In fields like mental health, accurately determining a person’s emotional state could lead to better, more targeted care.

Examples of work in this area include research on teaching computers to recognize facial expressions of pain in patients and AI-based emotion recognition tools for customer service.

B. Emotional Intelligence in AI

Integrating emotional intelligence into AI systems is another promising area of future research in emotional AI. Emotional intelligence includes the ability to identify and respond appropriately to other people’s emotions as well as the ability to manage one’s own emotional state. Incorporating it could improve AI systems’ ability to provide empathetic, emotionally supportive interactions with users.

One example of emotional intelligence in AI is using AI to help train people in emotional intelligence. Another is AI-powered virtual assistants that can help their users feel better and keep them company.

C. Emotional Intelligence Market

Finally, the market for emotional intelligence technology is expected to keep growing. MarketsandMarkets predicts that this market will expand from $18.2 billion in 2021 to $46.0 billion by 2026, driven mainly by rising demand for emotional AI in customer service, education, and mental health.
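As a quick sanity check on these two figures, the implied compound annual growth rate (CAGR) over the five years from 2021 to 2026 can be worked out directly. This is simple arithmetic on the numbers quoted above, not a growth rate taken from the MarketsandMarkets report itself:

```latex
\text{CAGR} = \left(\frac{\$46.0\,\text{B}}{\$18.2\,\text{B}}\right)^{1/5} - 1
            \approx 2.527^{\,0.2} - 1
            \approx 0.204
```

In other words, the forecast implies the market growing by roughly 20% per year.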

AI-powered mental health apps and chatbots, along with emotional AI in customer service and marketing that makes interactions with customers more personal and empathetic, are prime examples of products in this market.

V. Can AI Feel Emotions?

“Can AI feel emotions?” may sound like an odd question, but society still wants to know the answer. Artificial intelligence tries to mimic human intelligence, but it can only go so far because it is not alive. Even though AI can detect and respond to emotional cues, it does not feel emotions the way humans do. Its ability to simulate emotions comes from complex algorithms and machine learning rather than from direct experience. AI can’t feel real emotions, but it can imitate and respond to human emotions to help with various tasks, such as improving customer service and supporting therapy.

Even if AI cannot feel emotions, it can have a significant impact on human culture if it learns to recognize and respond to them appropriately. Emotional AI could be used in many ways, such as diagnosing and treating mental health problems, providing more personalized customer service, and creating more engaging art and entertainment.

VI. Conclusion

Emotional AI has become an increasingly important area within artificial intelligence. Therapy, customer service, education, and the arts are just a few of the areas where machines that can understand and respond to human emotions could be helpful.

Despite its many potential advantages, emotional AI must still overcome several obstacles, including bias, difficulty with complex emotions, and ethical concerns. Resolving these issues is essential to developing emotional AI technology ethically and inclusively.

It’s exciting to think about what the future holds for Emotional AI. Enhancing the potential of Emotional AI will depend heavily on the development of AI emotion recognition and Emotional Intelligence in AI. Increasing demand for this technology across industries is fueling predictions of rapid expansion for the Emotional Intelligence market over the next few years.

In conclusion, emotional AI will have a lasting effect on the development of the AI industry. By helping machines understand and meet people’s needs and feelings, it could make a big difference in how machines interact with people. As the field grows, ensuring that emotional AI is developed and used ethically is essential to avoid unintended consequences.

FAQs

How well machines can understand and talk to people depends on how well they can access and process emotional information. Our feelings inevitably colour our judgement and decision-making, and they also influence how well we remember and retain information. If AI can take emotions into account, machines will better understand and respond to the ways people express their feelings, leading to more natural conversations and better results.

The term “artificial emotions” describes AI systems that can recognise and respond to human feelings. Technologies such as natural language processing (NLP) and computer vision (CV) can be used to work out what people are feeling and respond appropriately.

We would want AI machines to have emotions to communicate with humans more naturally. Superior customer service, more effective therapy, and a more satisfying user experience are some of the benefits that could be realised if machines could read and respond to human emotions. Also, devices that can understand and respond to human emotions may help us make more moral and caring decisions.

Giving robots feelings could lead to more interaction between humans and robots, better customer service, more effective therapy, and more compassionate and moral decisions. Emotionally intelligent robots could help humans and machines get along better and bridge the gap between them.

Even though advanced AI could be made without the ability to feel emotions, it would have difficulty communicating with people. Because emotions play a significant role in human decision-making and communication, machines lacking emotional intelligence may have difficulty comprehending and appropriately responding to human behaviour.

Emotional intelligence is not a requirement for intelligence, but it helps robots interact better with humans. Understanding and expressing emotions in machines can make customer service better, therapy more effective, and the experience more enjoyable. This is because emotions are a big part of how people make decisions and talk to each other.

If robots had emotions, they would be better able to understand and interact with humans effectively. However, this would also raise ethical concerns about the rights and treatment of intelligent machines.

It’s already possible for AI to recognize and react to human emotions, but researchers have a long way to go before they fully grasp the nuances of human feeling. As artificial intelligence (AI) technology gets better, machines should be able to understand and show more emotions.

People have differing opinions on whether robots should be able to experience emotion. Some argue that emotionally intelligent machines will lead to more ethical and compassionate decision-making. Others have raised concerns about the potential for emotional manipulation and about how intelligent machines should be treated.
