I. Introduction

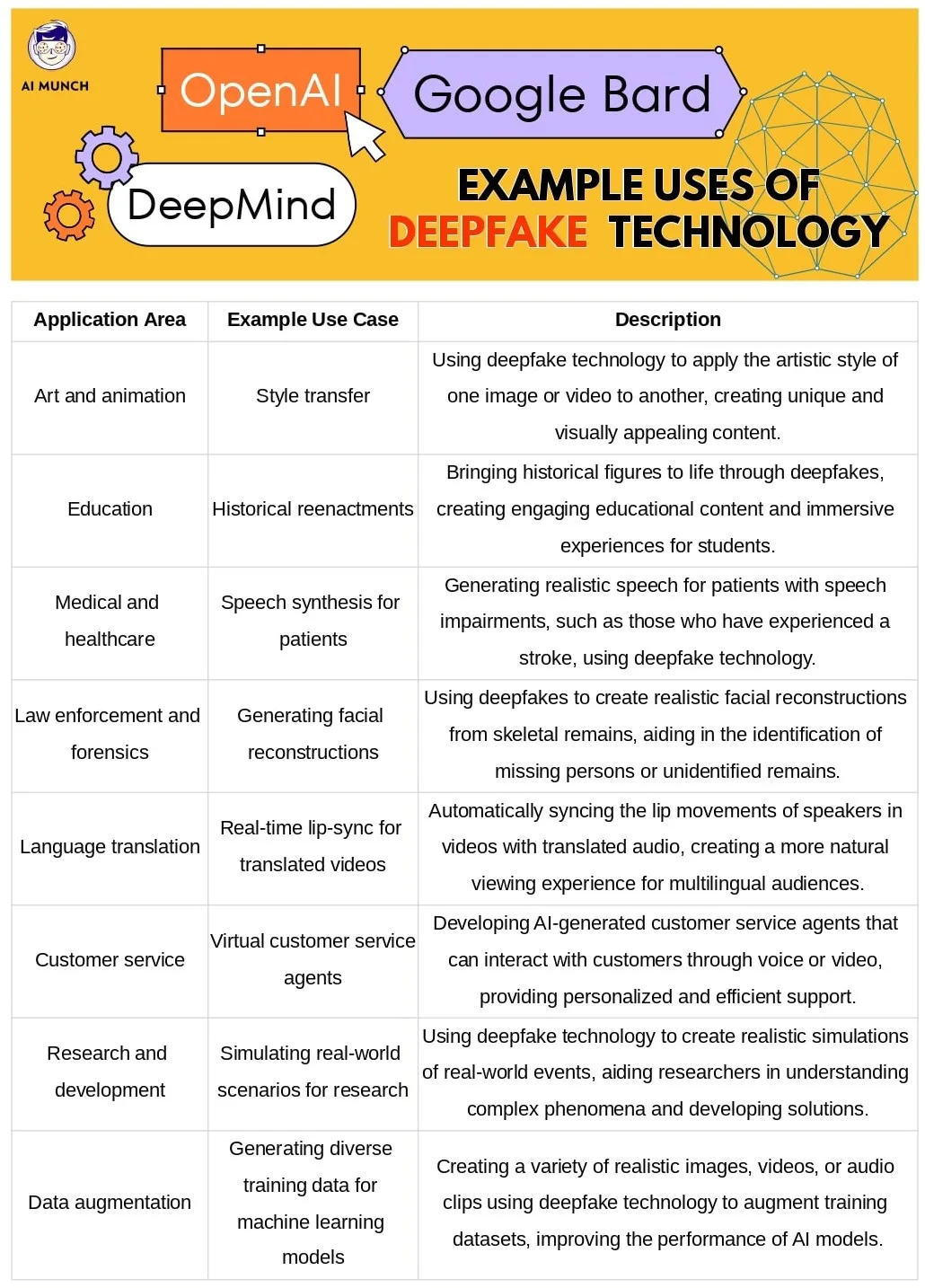

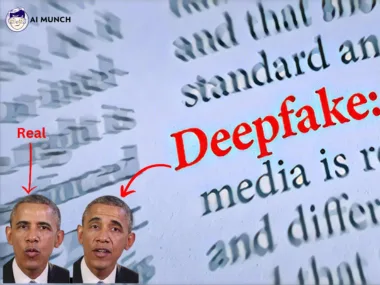

Deepfakes are a particular kind of synthetic media created by artificial intelligence (AI) algorithms. These algorithms can produce highly realistic images, videos, and audio that mimic real people or invent new characters. The word “deepfake” combines “deep learning,” a branch of AI that uses neural networks, and “fake,” which refers to the artificially created content. Deepfakes have drawn wide attention both for their potential to mislead, manipulate, and sway public opinion and for their potential applications across many industries.

The Impact of Deepfakes on Media and Society

The rise of deepfakes has had a significant impact on both the media environment and society at large. Deepfakes can blur the line between fact and fiction, making it increasingly hard to trust that digital content is authentic. This raises concerns that they could be used to spread misinformation, influence political elections, and damage people’s reputations.

On the other hand, deepfakes have also opened new opportunities for innovation and artistic expression in the entertainment sector. Film and television producers can use deepfakes to recreate actors who have passed away, create believable virtual characters, or de-age actors for flashback scenes. The technology has marketing uses as well: deepfakes can enable customized advertising campaigns and create virtual influencers on social media.

The importance of understanding Deepfake technology

As deepfakes become more sophisticated and widespread, understanding the underlying technology and its implications is crucial. By investigating the algorithms and procedures used to create deepfakes, we can improve our methods for detecting them and countering their harmful effects. A clear understanding of the technology also helps us navigate the ethical and legal issues raised by AI-generated content.

Greater awareness of deepfake technology can also support media literacy and the public’s ability to distinguish authentic content from manipulated content. This is essential for maintaining public confidence in the media and limiting the spread of misinformation. Ultimately, if we thoroughly understand deepfakes and their potential impact, we can harness their beneficial applications while reducing the risks they pose to society.

II. How Deepfake AI Works

A. Generative adversarial networks (GANs)

The generator

Generative adversarial networks (GANs) have two main parts: the generator and the discriminator. The generator is a neural network that produces synthetic data such as images and videos. It takes random noise as input and learns, from the underlying patterns and features of the training dataset, to turn that noise into realistic-looking content.
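To make the generator’s input/output contract concrete, here is a minimal Python sketch under heavy simplifying assumptions: the “data” is one-dimensional numbers rather than images, and the generator is a hypothetical affine map `G(z) = a*z + b` whose two parameters stand in for a deep network’s weights. Like a real generator, it turns random noise into samples; unlike one, it cannot model anything more complex than a shifted, scaled bell curve.

```python
import random


def generate(a, b, n, rng):
    """Toy generator G(z) = a*z + b applied to n standard-normal noise draws.

    a and b are hypothetical stand-ins for a generator's learnable weights;
    a real deepfake generator is a deep network producing images or video.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return [a * z + b for z in noise]


rng = random.Random(0)
samples = generate(a=0.5, b=4.0, n=5, rng=rng)
print(samples)  # five synthetic samples drawn from N(4.0, 0.5**2)
```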

The discriminator

The discriminator is a second neural network that judges the authenticity of the generator’s output. It is trained to tell real data from the training set apart from fake data produced by the generator. Its main objective is to correctly classify each piece of content as real or fake.
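The discriminator’s job can be sketched the same way. In this minimal Python example (an illustration, not any particular deepfake system), the discriminator is a hypothetical logistic model `D(x) = sigmoid(w*x + c)` over one-dimensional inputs, and its objective is the binary cross-entropy commonly used for this kind of real-versus-fake classification: real samples are labeled 1, generated samples 0.

```python
import math


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def discriminate(x, w, c):
    """Toy discriminator D(x) = sigmoid(w*x + c): estimated probability that x is real."""
    return sigmoid(w * x + c)


def discriminator_loss(real, fake, w, c):
    """Mean binary cross-entropy with real samples labeled 1 and generated samples 0."""
    eps = 1e-12  # guards against log(0)
    loss = 0.0
    for x in real:
        loss -= math.log(discriminate(x, w, c) + eps)
    for x in fake:
        loss -= math.log(1.0 - discriminate(x, w, c) + eps)
    return loss / (len(real) + len(fake))


# A discriminator that scores x = 4.0 as real and x = 0.0 as fake...
good = discriminator_loss([4.0], [0.0], w=2.0, c=-4.0)
# ...versus one that gets them backwards.
bad = discriminator_loss([4.0], [0.0], w=-2.0, c=4.0)
print(good, bad)
```

The correct discriminator earns the lower loss, and that difference is the signal that drives its training.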

Training process

In adversarial training, the generator and discriminator are trained together, typically by alternating updates between the two. The discriminator tries to get better at spotting fake content, while the generator tries to produce content that fools the discriminator. Repeating this process iteratively pushes both networks to improve, and the competition between them can eventually lead the generator to produce highly realistic deepfakes.
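The alternating game can be sketched end to end in a toy one-dimensional setting. Everything here is an assumption for illustration: the “real” data is drawn from a normal distribution centered at 4.0, the generator is the affine map `a*z + b`, the discriminator is the logistic model `sigmoid(w*x + c)`, the gradients are derived by hand, and the generator uses the common non-saturating loss `-log D(G(z))`. Each iteration gives the discriminator one gradient step and then the generator one step against the updated discriminator.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def discriminator_step(w, c, real, fake, lr):
    """One gradient-descent step on the discriminator's cross-entropy loss."""
    gw = gc = 0.0
    for x in real:  # gradient of -log D(x)
        d = sigmoid(w * x + c)
        gw -= (1.0 - d) * x
        gc -= 1.0 - d
    for x in fake:  # gradient of -log(1 - D(x))
        d = sigmoid(w * x + c)
        gw += d * x
        gc += d
    n = len(real) + len(fake)
    return w - lr * gw / n, c - lr * gc / n


def generator_step(a, b, w, c, noise, lr):
    """One step on the non-saturating loss -log D(G(z)) with G(z) = a*z + b."""
    ga = gb = 0.0
    for z in noise:
        d = sigmoid(w * (a * z + b) + c)
        ga -= (1.0 - d) * w * z  # chain rule through x = a*z + b
        gb -= (1.0 - d) * w
    n = len(noise)
    return a - lr * ga / n, b - lr * gb / n


def train(steps=2000, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator starts far from the real data
    w, c = 0.0, 0.0  # discriminator starts undecided
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(32)]  # hypothetical "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(32)]
        fake = [a * z + b for z in noise]
        w, c = discriminator_step(w, c, real, fake, lr)  # discriminator's turn
        a, b = generator_step(a, b, w, c, noise, lr)     # generator's turn
    return a, b, w, c


print(train())
```

This toy only makes the alternating structure concrete. GAN training is notoriously unstable, and even in this setting the parameters may oscillate around the real data rather than settle, so the sketch should not be read as a recipe for training a real deepfake model.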

B. Autoencoders and Variational Autoencoders

Encoder-decoder architecture

Autoencoders are a type of neural network with an encoder-decoder architecture. The encoder compresses the input data into a lower-dimensional representation called the latent space. The decoder then reconstructs the input data from this latent representation. Autoencoders are trained to minimize the difference between the input data and the reconstructed output.
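A minimal sketch of the encoder-decoder idea, assuming two-dimensional inputs, a one-dimensional latent space, and linear maps. The weights are hand-picked (a hypothetical unit direction `U`) rather than learned, so the example shows only the architecture and the reconstruction loss that training would minimize; deepfake autoencoders are deep convolutional networks operating on face images.

```python
import math

# Hypothetical tied weights: project 2-D inputs onto the unit direction (1, 2)/sqrt(5).
U = (1 / math.sqrt(5), 2 / math.sqrt(5))


def encode(x):
    """Encoder: compress a 2-D point to a 1-D latent code."""
    return U[0] * x[0] + U[1] * x[1]


def decode(z):
    """Decoder: map the 1-D latent code back to 2-D."""
    return (z * U[0], z * U[1])


def reconstruction_error(x):
    """Mean squared difference between input and reconstruction (the training loss)."""
    x_hat = decode(encode(x))
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


print(reconstruction_error((1.0, 2.0)))  # ~0: the point lies along U
print(reconstruction_error((1.0, 0.0)))  # ~0.4: the part perpendicular to U is lost
```

Points lying along `U` survive the round trip, while anything perpendicular to it is discarded; a trained autoencoder learns the directions (more generally, the nonlinear features) that keep this error low on its training data.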

Latent space and feature extraction

The latent space is a compressed representation of the input data that captures the most essential features. In the context of deepfakes, these features might include facial expressions, speech patterns, or other distinguishing characteristics. By mapping input data to the latent space, autoencoders can effectively learn the underlying structure of the data.

Applications in deepfakes

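Variational autoencoders (VAEs) are autoencoders that make the encoding process probabilistic: the encoder outputs a distribution over the latent space rather than a single point. Autoencoder-based deepfakes work by recombining latent representations learned from different individuals or sources. In the classic face-swap setup, one shared encoder is trained on faces of two people, with a separate decoder for each person; encoding a frame of person A and decoding it with person B’s decoder renders B’s face with A’s expression and pose.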
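The probabilistic part of a VAE can be shown in a few lines. In this Python sketch (not tied to any specific deepfake tool), the encoder is assumed to have already produced a mean `mu` and a log-variance `log_var` for each latent dimension; the code draws a latent sample with the reparameterization trick and computes the KL-divergence term that, together with the reconstruction error, makes up the standard VAE loss.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z ~ N(mu, sigma^2) as z = mu + sigma * eps with eps ~ N(0, 1).

    Writing the sample this way keeps z differentiable with respect to mu and
    log_var, which is what lets a VAE's encoder be trained by backpropagation.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


rng = random.Random(0)
z = reparameterize([0.5, -1.0], [0.0, -2.0], rng)
print(z, kl_to_standard_normal([0.5, -1.0], [0.0, -2.0]))
```

The KL term pulls each encoded distribution toward a standard normal, which keeps the latent space smooth enough that nearby codes decode to plausible outputs.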

C. Transfer learning and pre-trained models

Transfer learning is a technique that allows a pre-trained neural network to be fine-tuned for a new task with minimal additional training. This approach is beneficial for deepfake generation, as it enables developers to leverage existing models that have already learned the features and patterns of human faces, speech, or other relevant content.
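The core mechanic of transfer learning, freezing the pre-trained layers and training only a small new part on top, can be sketched in plain Python. The “pre-trained” weights `PRETRAINED` below are hypothetical stand-ins for a network already trained on faces, and the new task (a two-example binary classification) is invented for illustration; only the logistic-regression head and its bias are updated by gradient descent.

```python
import math

# Hypothetical "pre-trained" feature extractor. These weights stand in for a
# network already trained on faces and are frozen: nothing below updates them.
PRETRAINED = ((0.5, -0.2), (0.1, 0.9))


def features(x):
    """Frozen layer: ReLU(W x) with the pre-trained weights."""
    return [max(0.0, row[0] * x[0] + row[1] * x[1]) for row in PRETRAINED]


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def fine_tune_head(data, labels, lr=0.5, steps=500):
    """Full-batch gradient descent on a new logistic head over frozen features."""
    feats = [features(x) for x in data]  # computed once, since the base never changes
    head, bias = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(steps):
        g_head, g_bias = [0.0, 0.0], 0.0
        for f, y in zip(feats, labels):
            err = sigmoid(head[0] * f[0] + head[1] * f[1] + bias) - y  # dLoss/dlogit
            g_head[0] += err * f[0]
            g_head[1] += err * f[1]
            g_bias += err
        head = [h - lr * g / n for h, g in zip(head, g_head)]
        bias -= lr * g_bias / n
    return head, bias


def predict(x, head, bias):
    f = features(x)
    return sigmoid(head[0] * f[0] + head[1] * f[1] + bias)


head, bias = fine_tune_head([(1.0, 0.0), (0.0, 1.0)], [1, 0])
print(predict((1.0, 0.0), head, bias))  # > 0.5: positive class
print(predict((0.0, 1.0), head, bias))  # < 0.5: negative class
```

Because the frozen features are computed once and never updated, only three numbers are trained here; in a real model the same idea lets developers reuse a large pre-trained network and fine-tune a small fraction of its parameters.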

By using pre-trained models, deepfake creators can save time and resources during training and achieve more realistic results. However, this also raises concerns about the accessibility of deepfake technology and its potential misuse as powerful pre-trained models become more widely available.

III. Deepfake AI Examples

A. Deepfake in Entertainment industry

Film and television

Deepfakes have been used in film and television for purposes such as bringing deceased actors back to the screen, de-aging actors, and replacing actors for creative or logistical reasons. The technology lets filmmakers create visually convincing scenes with less reliance on expensive, time-consuming traditional CGI techniques.

Video games

In video games, deepfake technology can be employed to generate realistic characters, facial animations, and even in-game voice acting. By using AI-generated content, game developers can create more immersive and engaging experiences for players, with characters that closely resemble real humans.

Virtual reality and augmented reality

Deepfakes can also play a role in virtual reality (VR) and augmented reality (AR) applications by enabling the creation of realistic virtual avatars and environments. Users can interact with AI-generated characters or even create their own custom avatars, enhancing the overall VR or AR experience.

B. News and journalism

Synthetic news anchors

Deepfake technology has been used to generate synthetic news anchors that present news stories and reports with lifelike appearances and speech. These AI-generated anchors can give news organizations a cost-effective and efficient way to produce content while maintaining a consistent on-screen presence.

AI-generated articles and reports

Related generative AI techniques can also produce written content such as articles, reports, and opinion pieces. Using natural language processing models, AI can generate text that closely mimics human writing, potentially streamlining the news production process.

C. Social media and advertising

Influencers and virtual personalities

Deepfake technology has given rise to virtual influencers and personalities on social media platforms. These AI-generated characters can amass large followings and engage with audiences in a way that blurs the line between reality and fiction. Brands and advertisers are increasingly collaborating with virtual influencers for marketing campaigns and product endorsements.

Customized ad campaigns

Deepfakes can enable advertisers to create highly personalized and targeted marketing campaigns. By using AI-generated content, brands can tailor their ads to individual consumers, featuring specific products, messages, or even celebrity endorsements that resonate with their target audience.

D. Deepfake in Politics and misinformation

Disinformation campaigns

One of the most concerning applications of deepfake technology is its potential use in disinformation campaigns. Malicious actors can create deepfakes to spread false information, manipulate public opinion, or undermine political adversaries. This could have far-reaching consequences for the democratic process and for public trust in media and institutions.

Impact on elections and public opinion

Deepfakes can influence elections and sway public opinion by creating fake videos or audio recordings of political figures making controversial statements or engaging in inappropriate behavior. This can erode trust in political candidates and contribute to a more polarized and divided society.

IV. The Ethics of Deepfakes in AI

A. The balance between creativity and privacy

While deepfakes offer new opportunities for creative expression and innovation, they also raise significant privacy concerns. The technology allows realistic images and videos of individuals to be created without their consent, potentially infringing on their privacy and harming their reputations. Striking a balance between the creative potential of deepfakes and the need to protect individual privacy is a critical ethical challenge.

B. Deepfakes as a tool for manipulation and disinformation

Deepfakes can be a powerful tool for spreading disinformation and manipulating public opinion. By creating convincing fake content, malicious actors can deceive audiences and undermine trust in media, institutions, and democratic processes. Addressing the ethical implications of deepfakes as a tool for manipulation requires a multifaceted approach, including public awareness, technological solutions, and regulatory measures.

C. Legal and regulatory frameworks

1. Intellectual property rights

Deepfakes can raise intellectual property issues when they reuse copyrighted footage, images, or recordings without permission, and they can exploit a person’s likeness or voice, which in many jurisdictions is protected by right-of-publicity laws rather than by copyright. Existing copyright and trademark laws may not fully address the unique challenges posed by deepfakes, prompting a need for updated legal frameworks and guidelines that consider the implications of AI-generated content.

2. Privacy and defamation laws

Deepfakes can violate privacy and lead to defamation by falsely portraying individuals in damaging or harmful contexts. Legal frameworks must evolve to address these issues, determining the extent to which creators and distributors of deepfake content can be held responsible for the consequences of their actions. This may involve updating privacy laws and establishing clear legal standards for AI-generated content.

D. The role of technology companies and platforms

Social media platforms and technology companies play an essential role in reducing the harm deepfakes cause. By developing and deploying deepfake detection algorithms, these platforms can limit the spread of malicious content and help maintain the integrity of digital media. They must also set clear policies for producing and sharing deepfake content, balancing free expression against user safety.

Companies and platforms should also invest in media literacy initiatives that educate users about deepfakes, so that people can distinguish authentic content from manipulated content. By taking a proactive and responsible approach to managing deepfakes, technology companies can support ethical uses of AI-generated content while minimizing the risks associated with the technology.

V. Deepfake Detection and Mitigation

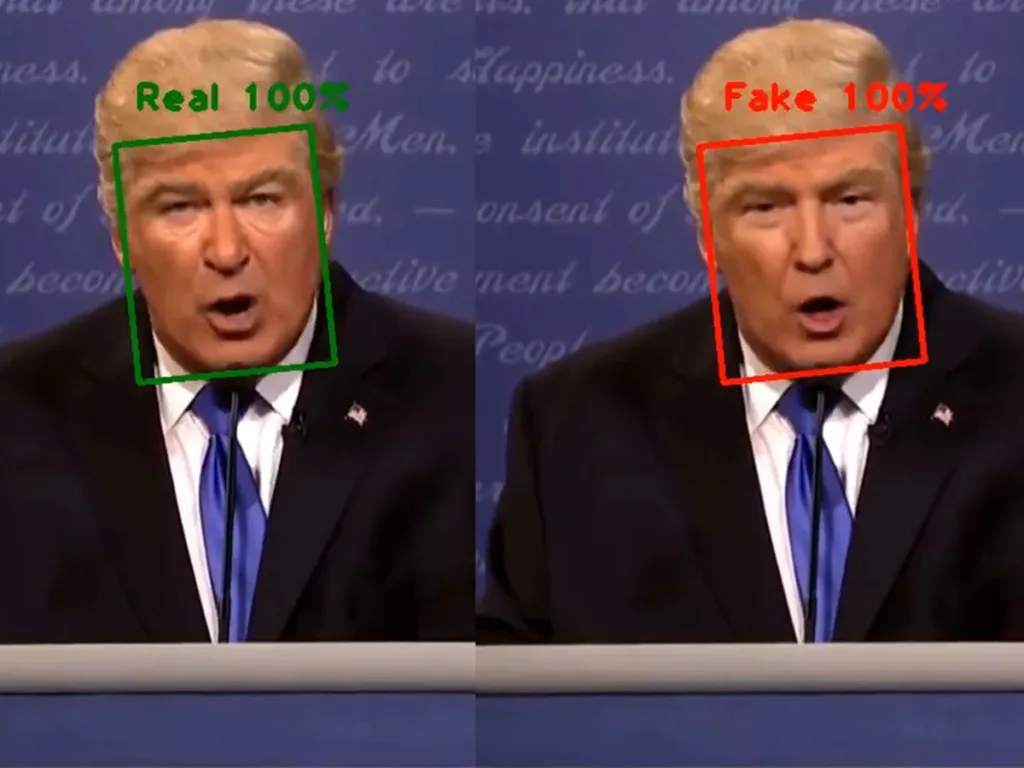

A. Traditional Deepfake detection techniques

Visual artifacts and inconsistencies

One approach to detecting deepfakes involves examining the content for visual artifacts and inconsistencies, such as unnatural lighting, distorted facial features, or irregular eye or mouth movements. These inconsistencies can be a sign of manipulation, indicating that the content may be a deepfake.
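As a concrete example of checking eye movements, one heuristic explored in deepfake research is that some early deepfakes blinked unusually rarely. The Python sketch below assumes facial landmarks have already been extracted for each frame by some face-landmark detector (not shown). It computes the eye aspect ratio (EAR), which falls toward zero when the eye closes, and counts blinks as runs of low-EAR frames; the threshold of 0.2 and the two-frame minimum are assumed values that would need tuning.

```python
import math


def eye_aspect_ratio(p):
    """EAR from six eye landmarks p[0]..p[5]: corner, top, top, corner, bottom, bottom.

    The ratio of eye height to eye width drops sharply when the eye closes.
    """
    vertical = math.dist(p[1], p[5]) + math.dist(p[2], p[4])
    horizontal = math.dist(p[0], p[3])
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the clip ends
        blinks += 1
    return blinks


open_eye = [(0, 0), (1, 1), (3, 1), (4, 0), (3, -1), (1, -1)]
print(eye_aspect_ratio(open_eye))  # 0.5
print(count_blinks([0.3, 0.3, 0.1, 0.1, 0.3, 0.1, 0.3, 0.1, 0.1, 0.1]))  # 2
```

A blink count that is implausibly low for the clip’s length is at most a reason for closer inspection, since newer generation methods may reproduce natural blinking.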

Audio analysis

Another method for detecting deepfakes involves analyzing the audio components of a video. This can include examining the synchronization between audio and visual elements, as well as identifying discrepancies in speech patterns or voice characteristics that may suggest manipulation.
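One simple way to test audio-visual synchronization is to compare how wide the mouth is open in each frame with how loud the audio is at the same moment. This Python sketch assumes both signals have already been extracted and resampled to one value per video frame (the extraction is not shown); it searches a small range of frame offsets for the one where the two signals correlate best.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / math.sqrt(va * vb)


def best_av_lag(mouth, audio, max_lag):
    """Find the frame offset at which audio loudness best tracks mouth opening.

    Both sequences must have the same length. A positive lag means the audio
    trails the video by that many frames. Returns (lag, correlation).
    """
    n = len(mouth)
    best_lag, best_r = 0, -2.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = mouth[: n - lag], audio[lag:]
        else:
            a, b = mouth[-lag:], audio[: n + lag]
        r = pearson(a, b)
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


mouth = [0, 1, 0, 0, 2, 0, 1, 3, 0, 0, 1, 0]
audio = [0, 0] + mouth[:-2]  # the same pattern, delayed by two frames
print(best_av_lag(mouth, audio, max_lag=3))
```

In genuine footage the best offset should sit near zero with a clearly positive correlation; a large offset or a weak peak correlation is a hint, not proof, that the audio and video may not belong together.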

B. AI-based Deepfake detection methods

Deep learning for deepfake identification

Deep learning algorithms can identify deepfakes by learning the differences between AI-generated content and authentic media. Trained on labeled examples of both, these models can pick up subtle patterns and features associated with deepfakes and often detect manipulated content with high accuracy on test data, although their performance can drop on manipulation techniques they did not see during training.
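Claims of “high accuracy” depend on how a detector is evaluated. This Python sketch shows the usual bookkeeping: given a detector’s scores (assumed to be the probability that an item is fake) and ground-truth labels for a held-out test set, it computes accuracy, precision, and recall at a chosen threshold. The threshold of 0.5 is an assumption, and the example scores are made up.

```python
def evaluate(scores, labels, threshold=0.5):
    """Accuracy, precision and recall for a detector whose score is P(fake).

    labels: 1 = deepfake, 0 = authentic.
    """
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted_fake = score >= threshold
        if predicted_fake and label == 1:
            tp += 1
        elif predicted_fake and label == 0:
            fp += 1
        elif not predicted_fake and label == 0:
            tn += 1
        else:
            fn += 1
    accuracy = (tp + tn) / len(labels)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


scores = [0.9, 0.8, 0.3, 0.6, 0.1, 0.2]  # hypothetical detector outputs
labels = [1, 1, 1, 0, 0, 0]
print(evaluate(scores, labels))  # accuracy, precision and recall are all 2/3 here
```

Reporting precision and recall alongside accuracy matters because deepfakes can be rare in real-world content, where a detector that labels everything authentic could still score a high accuracy.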

Adversarial training

In machine learning, adversarial training means exposing a model during training to adversarial examples: inputs deliberately perturbed to fool it. For deepfake detectors, this includes fakes crafted specifically to evade detection; training on such examples alongside real and ordinary manipulated content can make detectors more robust.
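A minimal sketch of adversarial training for a detector, using the fast gradient sign method (FGSM), one standard way to craft adversarial examples. The detector is a hypothetical logistic model over a small feature vector (real detectors are deep networks over images or video), and `eps` and `lr` are illustrative values. Each training step adds, for every clean example, a copy perturbed in the direction that most increases the detector’s loss.

```python
import math


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def predict(w, b, x):
    """Logistic detector: probability that feature vector x comes from a deepfake."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def loss(w, b, x, y):
    """Binary cross-entropy for one example (y = 1 fake, y = 0 real)."""
    p = min(max(predict(w, b, x), 1e-12), 1.0 - 1e-12)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """Move x by eps per feature in the direction that raises the loss.

    For a logistic model, the gradient of the loss with respect to x is (p - y) * w.
    """
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_training_step(w, b, batch, eps, lr):
    """One gradient step on each clean example plus its FGSM-perturbed copy."""
    augmented = []
    for x, y in batch:
        augmented.append((x, y))
        augmented.append((fgsm(w, b, x, y, eps), y))
    gw, gb = [0.0] * len(w), 0.0
    for x, y in augmented:
        err = predict(w, b, x) - y  # derivative of the loss with respect to the logit
        gw = [g + err * xi for g, xi in zip(gw, x)]
        gb += err
    n = len(augmented)
    return [wi - lr * g / n for wi, g in zip(w, gw)], b - lr * gb / n


w, b = [1.0, -2.0], 0.0
x, y = [0.5, 0.5], 1  # a fake the detector currently misses
x_adv = fgsm(w, b, x, y, eps=0.1)
print(loss(w, b, x, y), loss(w, b, x_adv, y))  # the perturbed copy is harder
w, b = adversarial_training_step(w, b, [(x, y)], eps=0.1, lr=0.1)
```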

C. The role of media literacy and public awareness in countering deepfakes

Educating the public about deepfakes and promoting media literacy is essential in mitigating the potential negative effects of this technology. By teaching people how to recognize manipulated content and encouraging critical thinking when consuming digital media, society can become more resilient to disinformation and deception.

VI. Conclusion

Deepfakes and synthetic media create new opportunities and new threats across many fields. As the technology develops, it is essential to keep monitoring the risks and ethical implications of AI-generated content.

The potential benefits and positive applications of Deepfakes

There are legitimate reasons to be concerned about deepfakes, but there are also many positive uses for the technology. Among these are developments in the creative arts, targeted marketing, and new forms of entertainment. The benefits of this ground-breaking technology can be realized if deepfakes are used responsibly.

The future of deepfakes will depend on how well we combine technological advancement with moral responsibility. By developing robust detection and mitigation strategies, establishing legal and regulatory frameworks, and promoting media literacy, we can work toward a future in which the potential of deepfakes is harnessed for good while the risks to individuals and society are minimized.

FAQs

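Are deepfakes illegal?

Creating a deepfake is not illegal in itself in most places, but deepfakes pose a risk to individuals and society alike because they can sow confusion and distort reality, and specific uses, such as fraud or defamation, can break existing laws. Separately, intellectual property rights protect a person’s original work, which can be anything from a book or painting to a movie or a piece of software; building a deepfake from such material without permission may infringe those rights.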

When did deepfakes begin?

The roots of deepfakes go back to 1997 and the Video Rewrite program, developed by Christoph Bregler, Michele Covell, and Malcolm Slaney. The program modified existing video footage to create new content, such as making a person appear to mouth words they did not say in the original recording.

How do deepfakes work?

Deepfake videos are produced with artificial intelligence (AI) and machine learning models. In the generative adversarial network (GAN) technique, one model, the generator, creates fake content, while a second model, the discriminator, analyzes the output to judge whether it is genuine; training the two against each other makes the fakes increasingly realistic.

How can marketers use deepfakes?

Marketers can save money on video campaigns by using deepfakes instead of filming actors in person. They can license an actor’s likeness, reuse previously recorded digital footage, insert the appropriate dialogue, and create a new video.

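How does FakeCatcher detect deepfakes?

FakeCatcher uses photoplethysmography (PPG), which measures subtle color changes in the skin caused by blood flow, to capture blood-flow signals from across a video subject’s face. The tool then converts those signals into spatiotemporal maps, which deep learning algorithms compare with the PPG activity of real humans to determine whether the video’s subject is real or fake.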
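FakeCatcher’s own implementation is not described here, but the signal it relies on can be illustrated. In a real face, the pulse causes tiny periodic changes in skin color; in remote PPG this shows up as periodicity in, for example, the average green value of a skin region across frames. The Python sketch below assumes that per-frame signal has already been extracted, finds its dominant frequency with a naive discrete Fourier transform, and checks whether it falls in an assumed heart-rate band of 0.7 to 4 Hz (42 to 240 beats per minute).

```python
import math


def dominant_frequency(signal, fps):
    """Return the strongest frequency (Hz) in `signal` using a naive DFT."""
    n = len(signal)
    mean = sum(signal) / n
    x = [v - mean for v in signal]  # remove the constant skin-tone level
    best_k, best_power = 0, -1.0
    for k in range(1, n // 2 + 1):
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        power = re * re + im * im
        if power > best_power:
            best_k, best_power = k, power
    return best_k * fps / n


def plausible_pulse(signal, fps, low_hz=0.7, high_hz=4.0):
    """Crude check: does the dominant periodicity fall in a human heart-rate band?"""
    return low_hz <= dominant_frequency(signal, fps) <= high_hz


fps = 30
# Hypothetical 10-second green-channel trace with a 1.2 Hz (72 bpm) pulse.
pulse = [100.0 + math.sin(2 * math.pi * 1.2 * t / fps) for t in range(300)]
print(dominant_frequency(pulse, fps))  # 1.2
print(plausible_pulse(pulse, fps))     # True
```

This single-region, single-frequency check is far cruder than the approach described above, which maps PPG signals from many facial regions and feeds them to deep learning models.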
