Introduction

Amid OpenAI’s growing rivalry with Google, its announcement of GPT-4, the next generation of its highly popular language model, has generated great excitement across the technology industry. However, security professionals are urging caution because of the potential dangers of this new artificial intelligence (AI) tool. In this article, we look at the risks and concerns surrounding OpenAI’s GPT-4 from the experts’ point of view.

The previous iteration of the model, GPT-3, has shown remarkable capabilities in tasks such as natural language processing (NLP), text completion, and creative writing. Despite this, it has also been a source of contention because of concerns about bias, privacy, and possible malicious applications. We have previously covered best practices for ChatGPT based on GPT-3.

These concerns and risks will likely become more prominent once GPT-4 becomes freely available. The new model is expected to be even larger and more powerful than its predecessor, and to produce text that is even more convincing and realistic.

GPT-4 is Making Room For Malicious Actors

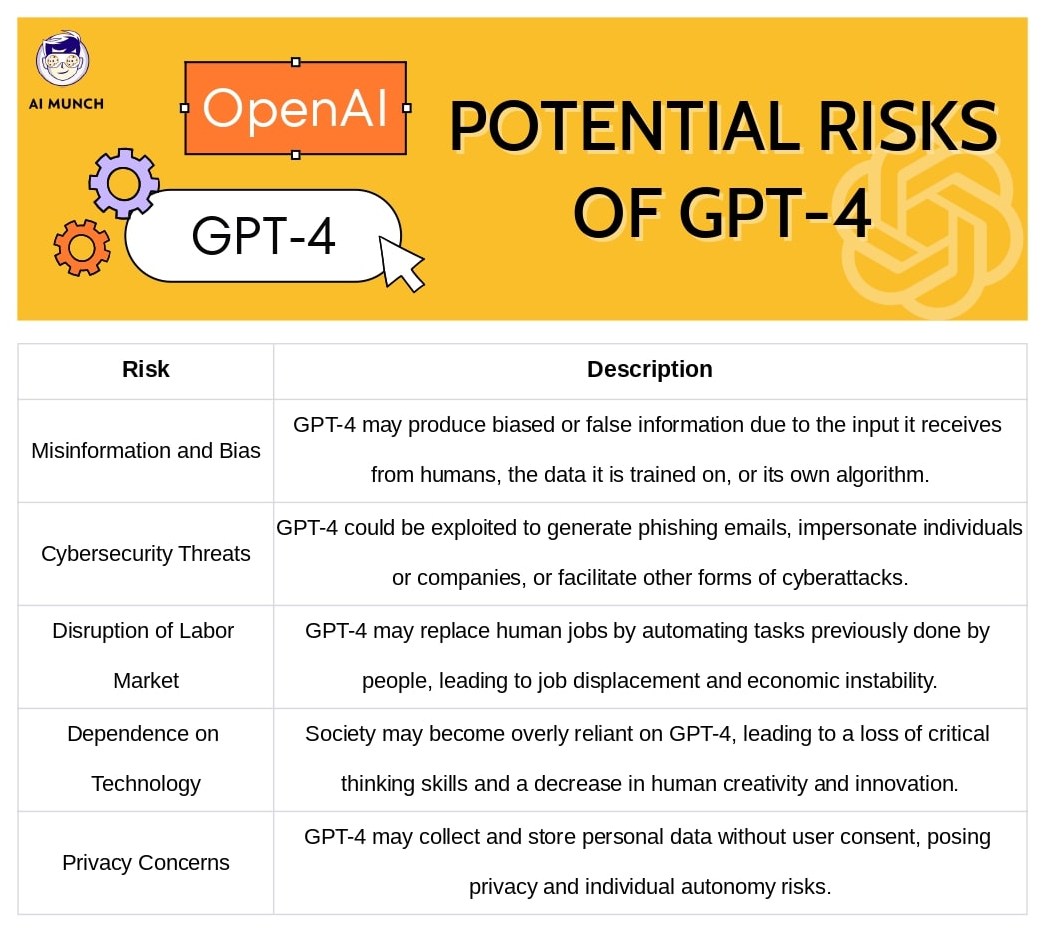

A significant worry is that GPT-4 could be used for malicious purposes. The model could be exploited to create fake news articles, phishing emails, or convincing deepfakes, causing financial and reputational harm to individuals and businesses and potentially affecting national security.

Another issue is the potential for bias to be ingrained in the model. GPT-3 has already been criticized for propagating harmful stereotypes and spreading false information, and because GPT-4 builds on the same approach, it may share these weaknesses. If they are not addressed during GPT-4’s development, these biases may be amplified and further perpetuated in society.

Privacy is another major GPT-4 risk. Training the model requires vast amounts of data, which may include emails, social media posts, and other personal or confidential information. This raises concerns about how that data is accessed, used, and protected.

Because AI has a dark side, security experts are calling for greater transparency and accountability in how GPT-4 is built and deployed. They recommend measures that safeguard user privacy, prevent malicious use, and minimize bias.

GPT-4 and Security Issues: How Can the Risks Be Contained?

One proposed solution is an independent regulatory body overseeing AI technology development and deployment. Such a body could establish ethical guidelines and ensure that AI tools are developed transparently and responsibly.

Another proposal is for companies like OpenAI to be more active in addressing these concerns. They could collaborate with experts and researchers to identify and mitigate potential risks, and engage in discussions with stakeholders to ensure that the advantages of AI are maximized while minimizing its potential negative impact.

While the development of GPT-4 represents a significant advancement in AI, it also presents significant risks. Security experts warn about possible malicious use, bias, and privacy issues. All stakeholders, including companies, governments, and individuals, must proactively address these risks and ensure that AI is developed and deployed ethically and responsibly. Only then can we fully leverage this powerful technology’s potential to benefit society.

OpenAI CEO Warns of Risks Associated with ChatGPT and Similar AI Applications

Sam Altman, the CEO of OpenAI, the company behind ChatGPT, is worried about the ways this technology could be misused. In an interview with ABC News, he said that regulators and society need to be involved in reducing these risks and ensuring the technology is not abused.

AI can certainly help with cybersecurity, but Altman warned that these models could be used to spread disinformation and, as they get better at writing computer code, to launch offensive cyberattacks. He stressed that the risks of this technology must be acknowledged and handled responsibly rather than ignored or embraced uncritically.

OpenAI’s GPT-4 AI Model Brings Great Potential and Great Danger

OpenAI has released GPT-4, the latest iteration of its language AI model. The model has demonstrated impressive abilities, scoring around the 90th percentile on the Uniform Bar Exam and about the 89th percentile on the SAT Math test. Sam Altman, CEO of OpenAI, cautioned about the risks that could come with AI technology and urged society and regulators to get involved to avoid bad outcomes for humanity. Despite widespread concerns that AI will replace people, Altman emphasized that AI still depends on human input and control, and he warned that some users might not abide by safety precautions. Elon Musk, the CEO of Tesla, echoed Altman’s worries when he cautioned against the potential risks of artificial general intelligence (AGI).

Elon Musk Voices Concerns over Lack of AI Regulation While OpenAI Warns of a “Hallucinations Problem”

Elon Musk, an outspoken advocate of AI safety regulation, has expressed concern about Microsoft’s decision to eliminate a team responsible for monitoring AI ethics while it builds ChatGPT technology into Bing. “There is no regulatory oversight of AI, which is a major problem,” he tweeted in December. In another tweet this week, Musk wondered what would be left for humans to do. Meanwhile, OpenAI’s Sam Altman warned of a “hallucinations problem” in GPT-4. Altman cautioned that the model may confidently state made-up facts, because it relies on deductive reasoning rather than memorization. He emphasized that the technology should be viewed as a reasoning engine rather than a fact database.

“Compared to AI, progress with Neuralink will be slow and easy to assess, as there is large regulatory apparatus approving medical devices.”

“There is no regulatory oversight of AI, which is a *major* problem. I’ve been calling for AI safety regulation for over a decade!”

— Elon Musk (@elonmusk), December 1, 2022

Conclusion

We’ve discussed the release of OpenAI’s GPT-4 language AI model and its potential risks and limitations. We covered OpenAI CEO Sam Altman’s concerns about the dangers of AI and the need for regulation and safety limits, as well as Elon Musk’s concerns about the lack of regulatory oversight of AI and the potential loss of human jobs. We also examined GPT-4’s capabilities and limitations, such as its deductive reasoning abilities, its hallucination problem, and its improved performance over its predecessor, GPT-3.

Just as we have seen tools built on GPT-3, we will soon see tools built on GPT-4. Overall, while GPT-4 significantly improves capabilities, it also introduces risks and limitations that must be addressed. AI must be carefully regulated and monitored as it is developed and used, so that it does not have unintended effects and society stays safe and healthy.

FAQs

The first model was trained on information from the internet, such as news stories, books, and websites. Human AI trainers also provided data to make ChatGPT’s responses more human-like and conversational, for example by writing sample conversations in which they played both the user and the AI assistant.

Performance enhancements: GPT-4’s answers are more factually accurate than GPT-3.5’s. The model makes fewer “hallucinations,” or errors of fact or reasoning, scoring 40% higher than GPT-3.5 on OpenAI’s internal factuality evaluations.

It is more imaginative and coherent than GPT-3. It can handle longer pieces of text as well as images. It is more accurate and less likely to make “facts” up. Its capabilities open up a slew of new possibilities for generative AI.

GPT-3.5 can handle roughly 3,000 words of text at a time, while GPT-4 can handle more than 25,000 words.

GPT-4 is a large multimodal model, which means it can accept both text and image inputs and produce human-like text. For example, you could upload a worksheet to GPT-4, and it could read it and answer the questions.